The Evidence Approximation

Our linear regression model currently depends on the weights and on the hyperparameters (see prev. paragraphs). A fully Bayesian treatment would introduce prior distribution over all the parameters and hyperparameters, and calculate the predictive distribution by marginalization. Anyway, solving the integral of the marginalization on all these parameters is analytically intractable.

If we introduce two priors over (hyperpriors), then the predictive distribution is obtained by marginalizing over as follows:

Where is a likelihood function (given by 3.8) and is the posterior (the Gaussian with mean and covariance matrix ) and is a posterior for the hyperparameters.

An approximation, called Empirical Bayes, is given by:

- Obtaining the marginal likelihood by integrating over

- Maximizing the likelihood to obtain the hyperparameters

Another approximation can be used if the posterior is peaked around the values . In this case we just obtain the two values, replace them in the marginalization, and we marginalize over :

From Bayes theorem we know that:

If the prior is relatively flat, then can be obtained by maximizing the likelihood instead of the posterior .

But how do we compute the likelihood ? Let's marginalize over :

where

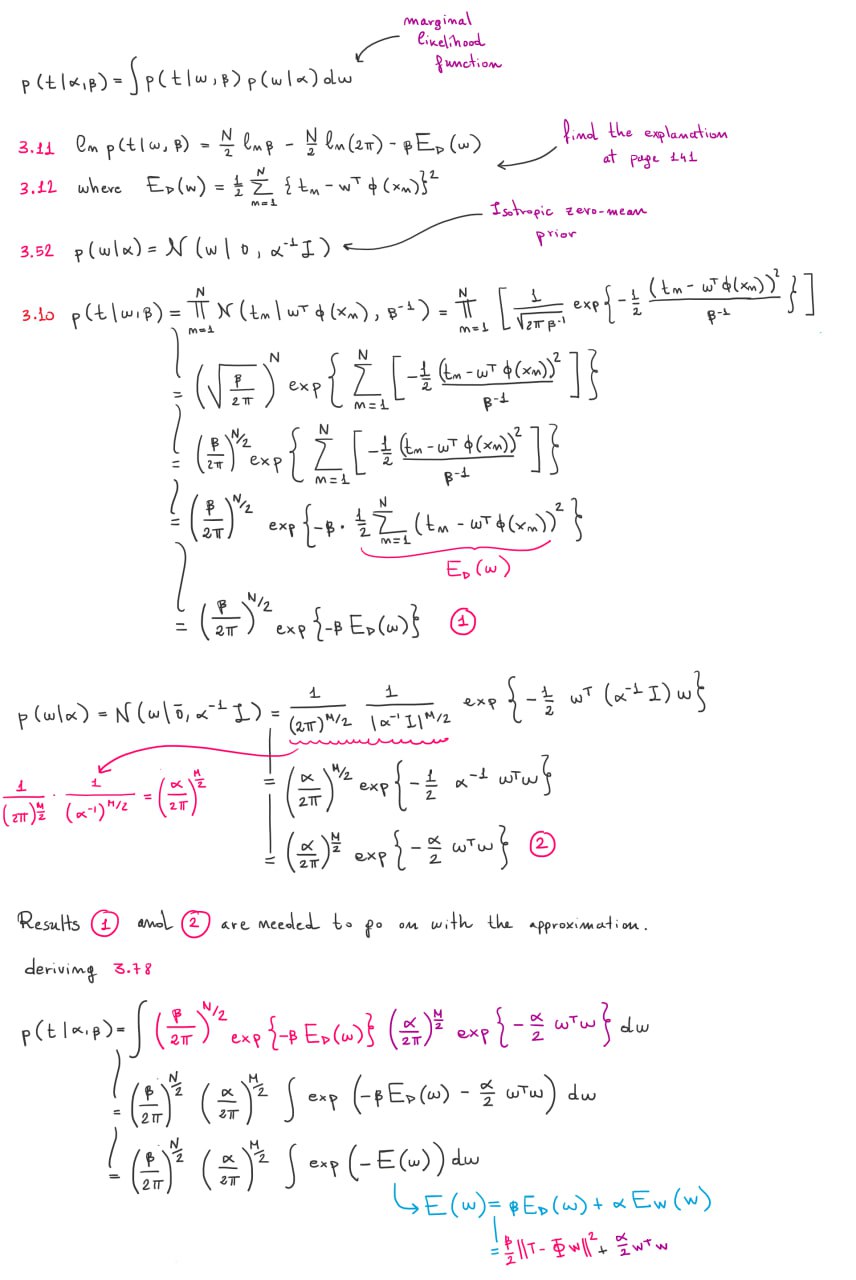

If you want to know the intermediate calculation denoted by , read the content of this image:

After this, the book does a little bit of magic. It defines:

- which is also (hessian of )

And then derives:

The steps are depicted in the online exercises solutions provided by the author:

There is a connection between this and the posterior distribution as we can see and .

We can now solve the integral inside the likelihood function :

Now we can replace in the likelihood formula:

And we can also calculate the log likelihood:

The book does an example with the polynomials as basis functions and shows how the likelihood prefers a trade-off between model accuracy and complexity, with lower values for high order polynomials.

Finding the hyperparameters

Now that we have obtained the likelihood expression , we want to maximize it for and .

Maximizing for

When we derive w.r.t. we only have to look at the term (click for more info). The eigenvalues of matrix have the form with . The derivative is: